BOOM! I was on the final 15km of an extremely hot bike ride when things suddenly spiraled out of control. A grey piece of metal in the middle of the road had pierced my front tire and caused a forceful explosion. Luckily, I was able to avoid a crash. There I was, in the middle of a New Jersey forest on one of the hottest days of the year. Cell phone reception? Forget it. What to do? A new inner tube on its own would have poked through the hole in the tire and been punctured shortly after. Time to MacGyver! Two dollar bills, neatly folded into the tire carcass, kept the new inner tube somewhat protected. It wasn't pretty, and the tire wobbled the whole way. But it held up for 15km and got me home safely.

MacGyvering

The classic '80s TV show MacGyver was an international sensation and gave rise to the term MacGyvering. Its main character, MacGyver, always managed to escape hopeless situations by building ingenious contraptions out of ordinary things. According to the Oxford Dictionary, to MacGyver is to make or repair something "in an improvised or inventive way, making use of whatever items are at hand". Think of the band-aid holding your broken glasses together, or the combination of plastic bag and duct tape that patches a hole in your tent. While resourceful, MacGyvered solutions are usually short-lived and not intended for long-term use.

The modern data stack?

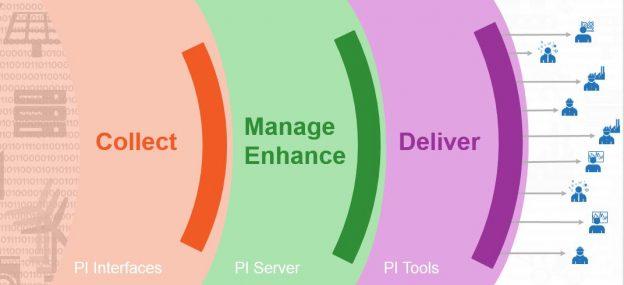

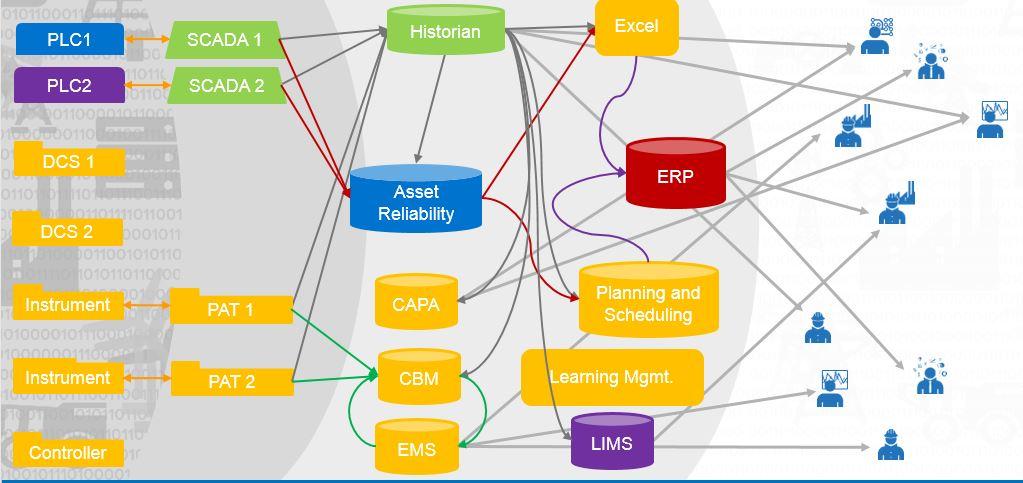

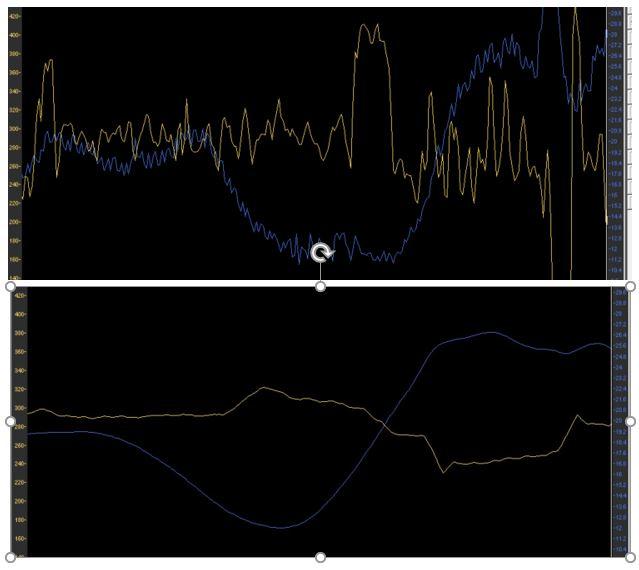

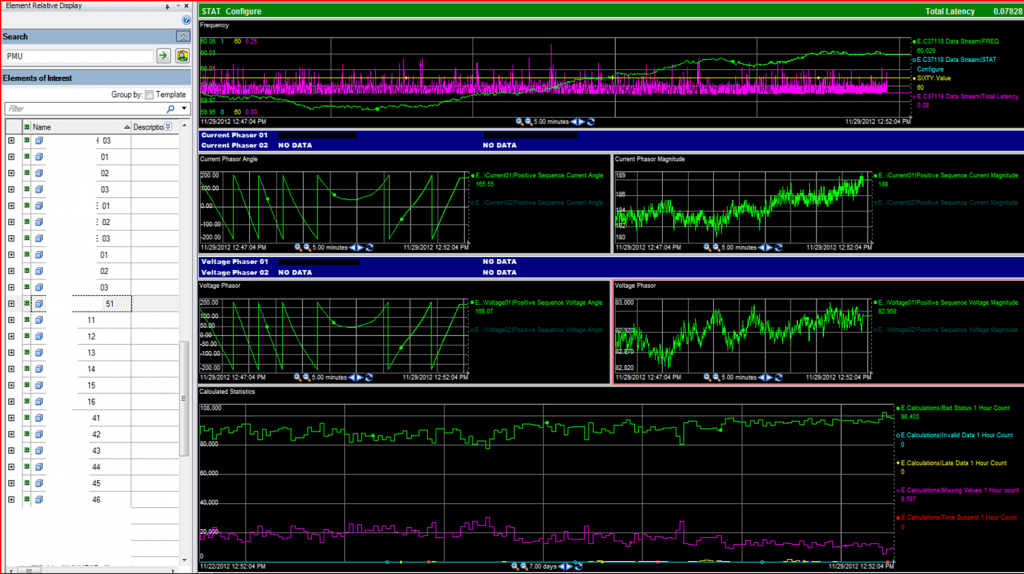

What does this have to do with data? Over the course of my career, I have witnessed many cases where organizations MacGyver their way to a solution, combining different software tools and methodologies to achieve a specific goal. It usually works for a while, but sooner or later something spins out of control. The same thing is currently happening in the data warehousing space. The market is full of point solutions, each handling a subset of the tasks involved in the lifecycle of a cloud data warehouse. Some people call this set of tools the 'modern data stack'. There is no doubt that these tools do a great job in their respective niches. But when you combine disparate instruments that were never designed to work together, you may end up not with a modern data stack but with a modern case of MacGyvering.

Sure enough, macros, scripts and work instructions keep things running for a while. But as soon as complexity increases, critical team members leave or upgrades break fragile integration points, things can easily get out of control. You quickly lose valuable time, make compromises along the way and spend your days fixing instead of building. And we haven't even started talking about training people in all these different tools... Is it really worth cutting corners in these volatile and exciting times?

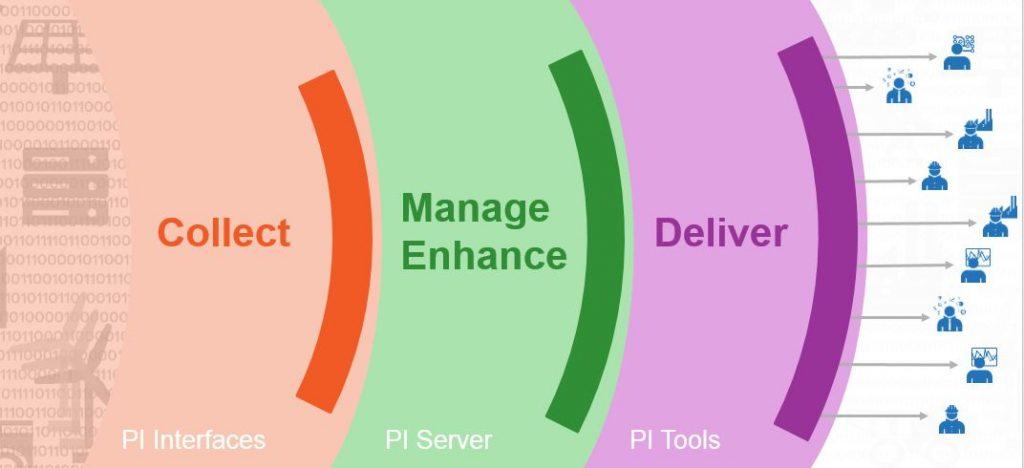

Enter: Agile Data Engine

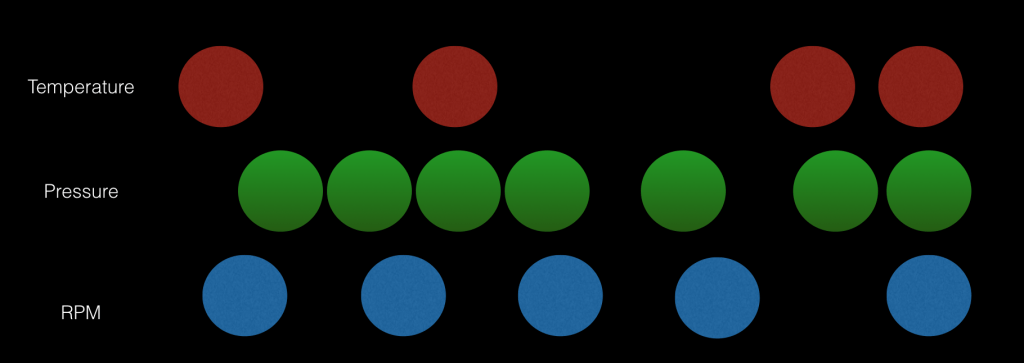

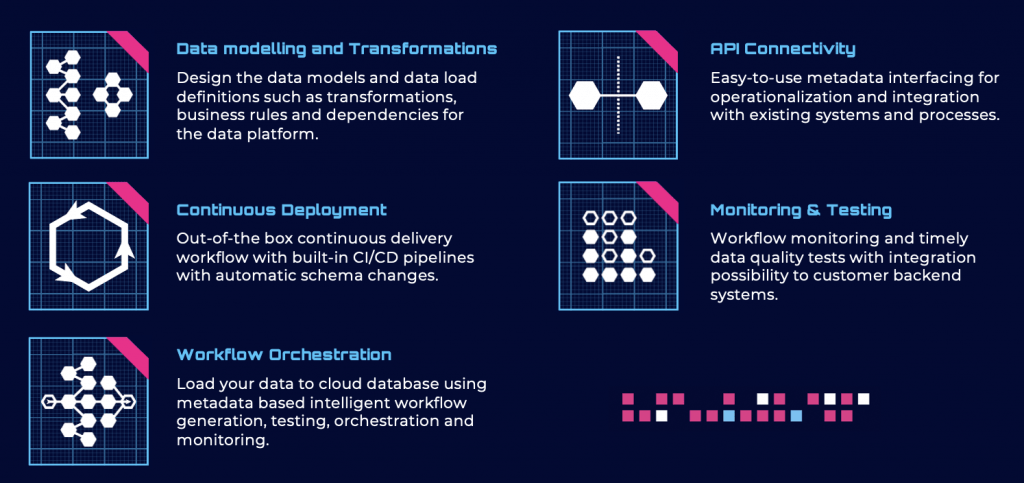

I recently joined Helsinki-based Agile Data Engine. Back in 2016, our fabulous team set out to build a single platform that fully automates the design, build and operations processes for data warehouses. No MacGyvering required, just a single product. Fast forward to 2023: more than 40 customers in Scandinavia happily rely on the Agile Data Engine platform to deliver ever-evolving yet stable cloud data warehouses. Customers love that they can do most of their work in a single environment that just works. A full array of functionality allows them to move towards a truly agile development and operations process. Does it work? Our customers enthusiastically say 'yes!'. Some of them now make up to 250 deployments per month while dramatically reducing the number of errors.

Zero hero

And best of all, your team's reliance on its MacGyvers (those smart people who can never go on vacation because process stability depends on their domain knowledge) is greatly reduced. And what do the MacGyvers think about this? They love it! No more wake-up calls in the middle of the night or on weekends when workflows fail. Instead, they can focus on what they do best: engineering and delivering great data products to their stakeholders. We call this the 'Zero Hero' approach.

Are you MacGyvering your cloud data warehouse? More about Agile Data Engine and what we do in upcoming blog posts.