Tag: big data

-

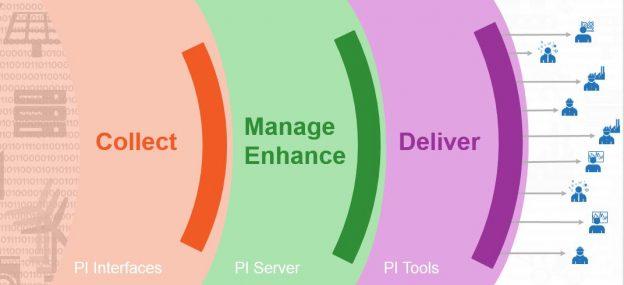

What is the OSIsoft PI System?

The OSIsoft PI System. In the last two blog posts, I spoke about Industry 4.0 and the challenges around working with industrial sensor data. Let me attempt to quickly summarize the outlined problems: Industry 4.0 initiatives require a ton of time-series data. Acquiring, managing and analyzing this data can be extremely challenging. This is where the…

-

Industry 4.0 and the sensor data analytics problem

That sensor data problem. A few weeks ago, I met with a number of IT consultants who had been hired to provide data science knowledge for an Industry 4.0 project at a large German industrial company. The day I saw them, they looked frazzled and frustrated. At the beginning of our meeting, they spoke about…

-

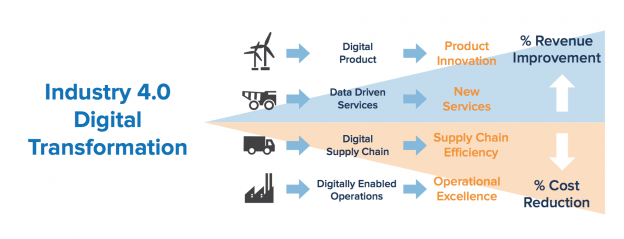

Industry 4.0 & Big Data

Industry 4.0. If you work in a manufacturing-related industry, it’s difficult to escape the ideas and concepts of Industry 4.0. A brainchild of the German government, Industry 4.0 is a framework intended to revolutionize the manufacturing world. Similar to what the steam engine did for us in earlier centuries, smart usage…

-

Big Data – Can’t ignore it?

Big data. 2012 is almost over and I just realized that I have not yet posted a single entry about big data. Clearly a big mistake – right? Let’s see: software vendors, media and industry analysts are all over the topic. If you listen to some of the messages, it seems that big data will…

-

Visual Analytics – The new frontier? (Guest Post)

What is visual analytics? By Dr Joern Kohlhammer. Massive sets of data are collected and stored in many areas today. As the volumes of data available to business people or scientists increase, it becomes harder and harder to use the data effectively. Keeping up to date with the flood of data using standard tools…